Probability distribution

Scaled inverse chi-squared

| Property | Value |
|---|---|
| Parameters | $\nu > 0$, $\tau^2 > 0$ |
| Support | $x \in (0, \infty)$ |
| PDF | $\frac{(\tau^2\nu/2)^{\nu/2}}{\Gamma(\nu/2)}\,\frac{\exp\left[\frac{-\nu\tau^2}{2x}\right]}{x^{1+\nu/2}}$ |
| CDF | $\Gamma\left(\frac{\nu}{2}, \frac{\tau^2\nu}{2x}\right) \Big/ \Gamma\left(\frac{\nu}{2}\right)$ |
| Mean | $\frac{\nu\tau^2}{\nu-2}$ for $\nu > 2$ |
| Mode | $\frac{\nu\tau^2}{\nu+2}$ |
| Variance | $\frac{2\nu^2\tau^4}{(\nu-2)^2(\nu-4)}$ for $\nu > 4$ |
| Skewness | $\frac{4}{\nu-6}\sqrt{2(\nu-4)}$ for $\nu > 6$ |
| Excess kurtosis | $\frac{12(5\nu-22)}{(\nu-6)(\nu-8)}$ for $\nu > 8$ |
| Entropy | $\frac{\nu}{2} + \ln\left(\frac{\tau^2\nu}{2}\Gamma\left(\frac{\nu}{2}\right)\right) - \left(1 + \frac{\nu}{2}\right)\psi\left(\frac{\nu}{2}\right)$ |
| MGF | $\frac{2}{\Gamma(\frac{\nu}{2})}\left(\frac{-\tau^2\nu t}{2}\right)^{\frac{\nu}{4}} K_{\frac{\nu}{2}}\left(\sqrt{-2\tau^2\nu t}\right)$ |
| CF | $\frac{2}{\Gamma(\frac{\nu}{2})}\left(\frac{-i\tau^2\nu t}{2}\right)^{\frac{\nu}{4}} K_{\frac{\nu}{2}}\left(\sqrt{-2i\tau^2\nu t}\right)$ |

The scaled inverse chi-squared distribution $\psi\,\mbox{inv-}\chi^2(\nu)$, where $\psi$ is the scale parameter, equals the univariate inverse Wishart distribution $\mathcal{W}^{-1}(\psi,\nu)$ with degrees of freedom $\nu$.

This family of scaled inverse chi-squared distributions is linked to the inverse-chi-squared distribution and to the chi-squared distribution:

If $X \sim \psi\,\mbox{inv-}\chi^2(\nu)$ then $X/\psi \sim \mbox{inv-}\chi^2(\nu)$, as well as $\psi/X \sim \chi^2(\nu)$ and $1/X \sim \psi^{-1}\chi^2(\nu)$.

Instead of $\psi$, the scaled inverse chi-squared distribution is most frequently parametrized by the scale parameter $\tau^2 = \psi/\nu$, and the distribution $\nu\tau^2\,\mbox{inv-}\chi^2(\nu)$ is denoted by $\mbox{Scale-inv-}\chi^2(\nu,\tau^2)$.

In terms of $\tau^2$ the above relations can be written as follows:

If $X \sim \mbox{Scale-inv-}\chi^2(\nu,\tau^2)$ then $\frac{X}{\nu\tau^2} \sim \mbox{inv-}\chi^2(\nu)$, as well as $\frac{\nu\tau^2}{X} \sim \chi^2(\nu)$ and $1/X \sim \frac{1}{\nu\tau^2}\chi^2(\nu)$.
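The relation $\nu\tau^2/X \sim \chi^2(\nu)$ suggests a simple way to simulate the distribution: draw a chi-squared variate and invert it. The following is a minimal sketch using only the Python standard library; the function name `sample_scaled_inv_chi2` and the chosen parameter values are illustrative assumptions, not part of the article.

```python
import random

def sample_scaled_inv_chi2(nu, tau2, rng=random):
    """Draw one value from Scale-inv-chi^2(nu, tau2) via nu*tau2/X ~ chi^2(nu)."""
    # A chi-squared variate with nu degrees of freedom is Gamma(shape=nu/2, scale=2).
    chi2 = rng.gammavariate(nu / 2.0, 2.0)
    return nu * tau2 / chi2

# Illustrative check: the sample mean should be close to nu*tau2/(nu-2) when nu > 2.
rng = random.Random(12345)
nu, tau2 = 10.0, 2.0
draws = [sample_scaled_inv_chi2(nu, tau2, rng) for _ in range(200_000)]
sample_mean = sum(draws) / len(draws)
theoretical_mean = nu * tau2 / (nu - 2.0)
```

With many draws, `sample_mean` should agree with `theoretical_mean` up to Monte Carlo error; the comparison is only meaningful for $\nu > 2$, where the mean exists.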

This family of scaled inverse chi-squared distributions is a reparametrization of the inverse-gamma distribution.

Specifically, if $X \sim \psi\,\mbox{inv-}\chi^2(\nu) = \mbox{Scale-inv-}\chi^2(\nu,\tau^2)$ then $X \sim \textrm{Inv-Gamma}\left(\frac{\nu}{2}, \frac{\psi}{2}\right) = \textrm{Inv-Gamma}\left(\frac{\nu}{2}, \frac{\nu\tau^2}{2}\right)$.
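The reparametrization can be checked directly on the densities. The sketch below assumes the usual shape and scale convention for the inverse-gamma density, with the symbols $\alpha$ and $\beta$ introduced here only for illustration.

```latex
% Inverse-gamma density with shape \alpha and scale \beta (assumed convention):
f_{\text{Inv-Gamma}}(x;\alpha,\beta)
  = \frac{\beta^{\alpha}}{\Gamma(\alpha)}\, x^{-\alpha-1} \exp\left[-\frac{\beta}{x}\right],
  \qquad x > 0.

% Substituting \alpha = \nu/2 and \beta = \nu\tau^{2}/2:
f_{\text{Inv-Gamma}}\left(x;\tfrac{\nu}{2},\tfrac{\nu\tau^{2}}{2}\right)
  = \frac{(\tau^{2}\nu/2)^{\nu/2}}{\Gamma(\nu/2)}\,
    \frac{\exp\left[\frac{-\nu\tau^{2}}{2x}\right]}{x^{1+\nu/2}}
  = f(x;\nu,\tau^{2}).
```

The right-hand side is the scaled inverse chi-squared density, so the two parametrizations describe the same family.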

Either form may be used to represent the maximum entropy distribution for a fixed first inverse moment $(E(1/X))$ and first logarithmic moment $(E(\ln(X)))$.

The scaled inverse chi-squared distribution also has a particular use in Bayesian statistics. Specifically, the scaled inverse chi-squared distribution can be used as a conjugate prior for the variance parameter of a normal distribution. The same prior in alternative parametrization is given by the inverse-gamma distribution.

The probability density function of the scaled inverse chi-squared distribution extends over the domain

x

>

0

{\displaystyle x>0}

f

(

x

;

ν

,

τ

2

)

=

(

τ

2

ν

/

2

)

ν

/

2

Γ

(

ν

/

2

)

exp

[

−

ν

τ

2

2

x

]

x

1

+

ν

/

2

{\displaystyle f(x;\nu ,\tau ^{2})={\frac {(\tau ^{2}\nu /2)^{\nu /2}}{\Gamma (\nu /2)}}~{\frac {\exp \left[{\frac {-\nu \tau ^{2}}{2x}}\right]}{x^{1+\nu /2}}}}

where

ν

{\displaystyle \nu }

degrees of freedom parameter and

τ

2

{\displaystyle \tau ^{2}}

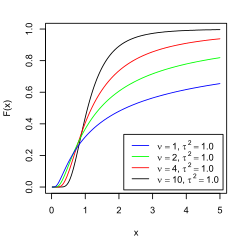

scale parameter . The cumulative distribution function is

F

(

x

;

ν

,

τ

2

)

=

Γ

(

ν

2

,

τ

2

ν

2

x

)

/

Γ

(

ν

2

)

{\displaystyle F(x;\nu ,\tau ^{2})=\Gamma \left({\frac {\nu }{2}},{\frac {\tau ^{2}\nu }{2x}}\right)\left/\Gamma \left({\frac {\nu }{2}}\right)\right.}

=

Q

(

ν

2

,

τ

2

ν

2

x

)

{\displaystyle =Q\left({\frac {\nu }{2}},{\frac {\tau ^{2}\nu }{2x}}\right)}

where

Γ

(

a

,

x

)

{\displaystyle \Gamma (a,x)}

incomplete gamma function ,

Γ

(

x

)

{\displaystyle \Gamma (x)}

gamma function and

Q

(

a

,

x

)

{\displaystyle Q(a,x)}

regularized gamma function . The characteristic function is

φ

(

t

;

ν

,

τ

2

)

=

{\displaystyle \varphi (t;\nu ,\tau ^{2})=}

2

Γ

(

ν

2

)

(

−

i

τ

2

ν

t

2

)

ν

4

K

ν

2

(

−

2

i

τ

2

ν

t

)

,

{\displaystyle {\frac {2}{\Gamma ({\frac {\nu }{2}})}}\left({\frac {-i\tau ^{2}\nu t}{2}}\right)^{\!\!{\frac {\nu }{4}}}\!\!K_{\frac {\nu }{2}}\left({\sqrt {-2i\tau ^{2}\nu t}}\right),}

where

K

ν

2

(

z

)

{\displaystyle K_{\frac {\nu }{2}}(z)}

Bessel function of the second kind .
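The density and the distribution function can be cross-checked numerically. The sketch below evaluates the density in log space and, for the special case where $\nu/2$ is a positive integer $m$, uses the identity $Q(m, y) = e^{-y}\sum_{k=0}^{m-1} y^k/k!$ to get the CDF in closed form. The function names and the midpoint-rule integrator are assumptions made for this illustration.

```python
import math

def pdf(x, nu, tau2):
    """Density of Scale-inv-chi^2(nu, tau2) at x > 0, evaluated in log space."""
    a = nu / 2.0
    b = nu * tau2 / 2.0
    log_f = a * math.log(b) - math.lgamma(a) - b / x - (1.0 + a) * math.log(x)
    return math.exp(log_f)

def cdf_even_nu(x, nu, tau2):
    """CDF Q(nu/2, nu*tau2/(2x)) for even positive integer nu only.

    Relies on Q(m, y) = exp(-y) * sum_{k<m} y^k / k! for integer m.
    """
    if nu <= 0 or nu % 2 != 0:
        raise ValueError("this closed form needs an even positive integer nu")
    m = int(nu) // 2
    y = nu * tau2 / (2.0 * x)
    return math.exp(-y) * sum(y ** k / math.factorial(k) for k in range(m))

def integrate_pdf(upper, nu, tau2, steps=20000):
    """Midpoint-rule integral of the density over (0, upper]."""
    h = upper / steps
    return h * sum(pdf((i + 0.5) * h, nu, tau2) for i in range(steps))

# Illustrative values: nu = 4, tau2 = 1.5, evaluated at x = 2.
nu, tau2, x = 4, 1.5, 2.0
closed_form = cdf_even_nu(x, nu, tau2)
numeric = integrate_pdf(x, nu, tau2)
```

For these values `closed_form` and `numeric` should agree closely. For general $\nu$ a library routine for the regularized upper incomplete gamma function would be needed instead of the integer-shape identity.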

Parameter estimation

The maximum likelihood estimate of $\tau^2$ is

$$\tau^2 = n \Big/ \sum_{i=1}^{n} \frac{1}{x_i}.$$

The maximum likelihood estimate of $\frac{\nu}{2}$ can be found using Newton's method on:

$$\ln\left(\frac{\nu}{2}\right) - \psi\left(\frac{\nu}{2}\right) = \frac{1}{n}\sum_{i=1}^{n} \ln\left(x_i\right) - \ln\left(\tau^2\right),$$

where $\psi(x)$ is the digamma function. An initial estimate can be found by taking the formula for the mean and solving it for $\nu$. Let $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$ be the sample mean. Then an initial estimate for $\nu$ is given by:

$$\frac{\nu}{2} = \frac{\bar{x}}{\bar{x} - \tau^2}.$$
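The estimation procedure can be sketched in plain Python. Since the standard library has no digamma or trigamma function, the sketch includes simple approximations (recurrence plus an asymptotic series); these helpers, the function name `fit_scaled_inv_chi2`, the step-halving safeguard and the simulated data are all assumptions for illustration rather than a reference implementation.

```python
import math
import random

def digamma(x):
    """Approximate digamma for x > 0: shift x up to >= 6, then asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result + math.log(x) - 0.5 / x - series

def trigamma(x):
    """Approximate trigamma (derivative of digamma) for x > 0."""
    result = 0.0
    while x < 6.0:
        result += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    series = inv * inv2 * (1.0 / 6 - inv2 * (1.0 / 30 - inv2 / 42))
    return result + inv + 0.5 * inv2 + series

def fit_scaled_inv_chi2(xs, tol=1e-10, max_iter=100):
    """Return (nu_hat, tau2_hat); assumes positive data that are not all equal."""
    n = len(xs)
    tau2 = n / sum(1.0 / x for x in xs)            # closed-form MLE of tau^2
    c = sum(math.log(x) for x in xs) / n - math.log(tau2)
    xbar = sum(xs) / n
    a = xbar / (xbar - tau2)                        # initial guess for nu/2
    for _ in range(max_iter):
        g = math.log(a) - digamma(a) - c            # equation to be zeroed
        dg = 1.0 / a - trigamma(a)                  # its derivative in a
        a_new = a - g / dg
        if a_new <= 0.0:                            # safeguard: keep a positive
            a_new = a / 2.0
        converged = abs(a_new - a) < tol * a
        a = a_new
        if converged:
            break
    return 2.0 * a, tau2

# Illustrative use on simulated data with nu = 10 and tau^2 = 2.
rng = random.Random(0)
true_nu, true_tau2 = 10.0, 2.0
data = [true_nu * true_tau2 / rng.gammavariate(true_nu / 2.0, 2.0)
        for _ in range(20000)]
nu_hat, tau2_hat = fit_scaled_inv_chi2(data)
```

The initial guess requires $\bar{x} > \tau^2$, which holds whenever the observations are not all identical, because the arithmetic mean exceeds the harmonic mean.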

Bayesian estimation of the variance of a normal distribution

The scaled inverse chi-squared distribution has a second important application, in the Bayesian estimation of the variance of a normal distribution.

According to Bayes' theorem, the posterior probability distribution for quantities of interest is proportional to the product of a prior distribution for the quantities and a likelihood function:

$$p(\sigma^2|D,I) \propto p(\sigma^2|I)\;p(D|\sigma^2)$$

where $D$ represents the data and $I$ represents any initial information about $\sigma^2$ that we may already have.

The simplest scenario arises if the mean $\mu$ is already known; or, alternatively, if it is the conditional distribution of $\sigma^2$ that is sought, for a particular assumed value of $\mu$.

Then the likelihood term $\mathcal{L}(\sigma^2|D) = p(D|\sigma^2)$ has the familiar form

$$\mathcal{L}(\sigma^2|D,\mu) = \frac{1}{\left(\sqrt{2\pi}\,\sigma\right)^{n}}\;\exp\left[-\frac{\sum_{i}^{n}(x_i-\mu)^2}{2\sigma^2}\right]$$

Combining this with the rescaling-invariant prior $p(\sigma^2|I) = 1/\sigma^2$, which can be argued (e.g. following Jeffreys) to be the least informative possible prior for $\sigma^2$ in this problem, gives a combined posterior probability

$$p(\sigma^2|D,I,\mu) \propto \frac{1}{\sigma^{n+2}}\;\exp\left[-\frac{\sum_{i}^{n}(x_i-\mu)^2}{2\sigma^2}\right]$$

This form can be recognised as that of a scaled inverse chi-squared distribution, with parameters $\nu = n$ and $\tau^2 = s^2 = \frac{1}{n}\sum_{i}(x_i-\mu)^2$.
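The identification of the parameters can be made explicit by rewriting the posterior in powers of $\sigma^2$ and comparing it with the kernel of the density $f(x;\nu,\tau^2)$ evaluated at $x = \sigma^2$. The following is a short worked step under the definitions already given above.

```latex
% Posterior rewritten in terms of \sigma^2, using \sum_i (x_i - \mu)^2 = n s^2:
p(\sigma^{2} \mid D, I, \mu)
  \propto (\sigma^{2})^{-(1 + n/2)} \exp\left[-\frac{n s^{2}}{2\sigma^{2}}\right].

% Kernel of the scaled inverse chi-squared density at x = \sigma^2:
f(\sigma^{2}; \nu, \tau^{2})
  \propto (\sigma^{2})^{-(1 + \nu/2)} \exp\left[-\frac{\nu \tau^{2}}{2\sigma^{2}}\right].

% Matching exponents and the argument of the exponential:
\nu = n, \qquad \nu\tau^{2} = n s^{2} \;\Longrightarrow\; \tau^{2} = s^{2}.
```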

Gelman et al. remark that the re-appearance of this distribution, previously seen in a sampling context, may seem remarkable; but given the choice of prior the "result is not surprising".[1]

In particular, the choice of a rescaling-invariant prior for $\sigma^2$ has the result that the probability for the ratio $\sigma^2/s^2$ has the same form (independent of the conditioning variable) when conditioned on $s^2$ as when conditioned on $\sigma^2$:

$$p\left(\tfrac{\sigma^2}{s^2}\,\Big|\,s^2\right) = p\left(\tfrac{\sigma^2}{s^2}\,\Big|\,\sigma^2\right)$$

In the sampling-theory case, conditioned on $\sigma^2$, the probability distribution for $(1/s^2)$ is a scaled inverse chi-squared distribution; and so the probability distribution for $\sigma^2$ conditioned on $s^2$, given a scale-agnostic prior, is also a scaled inverse chi-squared distribution.

If more is known about the possible values of $\sigma^2$, a distribution from the scaled inverse chi-squared family, such as $\mbox{Scale-inv-}\chi^2(n_0, s_0^2)$, can be a convenient form to represent a more informative prior for $\sigma^2$, as if from the result of $n_0$ previous observations (though $n_0$ need not necessarily be a whole number):

$$p(\sigma^2|I^{\prime},\mu) \propto \frac{1}{\sigma^{n_0+2}}\;\exp\left[-\frac{n_0 s_0^2}{2\sigma^2}\right]$$

Such a prior would lead to the posterior distribution

$$p(\sigma^2|D,I^{\prime},\mu) \propto \frac{1}{\sigma^{n+n_0+2}}\;\exp\left[-\frac{n s^2 + n_0 s_0^2}{2\sigma^2}\right]$$

which is itself a scaled inverse chi-squared distribution. The scaled inverse chi-squared distributions are thus a convenient conjugate prior family for $\sigma^2$ estimation.
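Reading the posterior above as a scaled inverse chi-squared kernel gives degrees of freedom $n + n_0$ and scale $(n s^2 + n_0 s_0^2)/(n + n_0)$. A minimal sketch of this conjugate update follows; the function name `update_scaled_inv_chi2` and the example numbers are hypothetical.

```python
def update_scaled_inv_chi2(n0, s0_sq, data, mu):
    """Conjugate update for sigma^2 with known mean mu.

    Prior: Scale-inv-chi^2(n0, s0_sq). Returns the posterior (nu, tau2).
    """
    n = len(data)
    # s^2 as defined in the text: mean squared deviation from the known mean.
    s_sq = sum((x - mu) ** 2 for x in data) / n
    nu_post = n0 + n
    tau2_post = (n0 * s0_sq + n * s_sq) / nu_post
    return nu_post, tau2_post

# Illustrative numbers: prior worth 2 observations with s0^2 = 1, four data points.
nu_post, tau2_post = update_scaled_inv_chi2(2, 1.0, [1.0, 2.0, 3.0, 4.0], mu=2.5)
```

In this example the data contribute $n s^2 = 5$, so the posterior has $\nu = 6$ and $\tau^2 = 7/6$. The posterior scale is a weighted average of the prior scale and the data scale, with weights $n_0$ and $n$.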

Estimation of variance when mean is unknown

If the mean is not known, the most uninformative prior that can be taken for it is arguably the translation-invariant prior $p(\mu|I) \propto \text{const.}$, which gives the following joint posterior distribution for $\mu$ and $\sigma^2$,

$$\begin{aligned}
p(\mu,\sigma^2 \mid D,I) &\propto \frac{1}{\sigma^{n+2}}\exp\left[-\frac{\sum_{i}^{n}(x_i-\mu)^2}{2\sigma^2}\right] \\
&= \frac{1}{\sigma^{n+2}}\exp\left[-\frac{\sum_{i}^{n}(x_i-\bar{x})^2}{2\sigma^2}\right]\exp\left[-\frac{n(\mu-\bar{x})^2}{2\sigma^2}\right]
\end{aligned}$$

The marginal posterior distribution for $\sigma^2$ is obtained from the joint posterior distribution by integrating out over $\mu$,

$$\begin{aligned}
p(\sigma^2|D,I) \;\propto\; &\frac{1}{\sigma^{n+2}}\;\exp\left[-\frac{\sum_{i}^{n}(x_i-\bar{x})^2}{2\sigma^2}\right]\;\int_{-\infty}^{\infty}\exp\left[-\frac{n(\mu-\bar{x})^2}{2\sigma^2}\right]d\mu \\
=\; &\frac{1}{\sigma^{n+2}}\;\exp\left[-\frac{\sum_{i}^{n}(x_i-\bar{x})^2}{2\sigma^2}\right]\;\sqrt{2\pi\sigma^2/n} \\
\propto\; &(\sigma^2)^{-(n+1)/2}\;\exp\left[-\frac{(n-1)s^2}{2\sigma^2}\right]
\end{aligned}$$

This is again a scaled inverse chi-squared distribution, with parameters $n-1$ and $s^2 = \sum (x_i-\bar{x})^2/(n-1)$.
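In practice the two parameters of this marginal posterior are just the sample size minus one and the usual unbiased sample variance. The sketch below computes them with the standard library; the function name `marginal_posterior_params` and the toy data are assumptions for illustration.

```python
import statistics

def marginal_posterior_params(data):
    """Return (nu, tau2) of the marginal posterior of sigma^2 with unknown mean.

    Assumes the flat prior on mu and the 1/sigma^2 prior described in the text.
    """
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    nu = n - 1
    # statistics.variance divides by n - 1, matching s^2 in the text.
    tau2 = statistics.variance(data)
    return nu, tau2

# Illustrative data; the posterior mean nu*tau2/(nu-2) exists because nu > 2 here.
nu, tau2 = marginal_posterior_params([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
posterior_mean = nu * tau2 / (nu - 2)
```

For these six observations the sum of squared deviations from the sample mean is 17.5, giving $\nu = 5$, $\tau^2 = 3.5$ and a posterior mean of $17.5/3$.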

Related distributions

- If $X \sim \mbox{Scale-inv-}\chi^2(\nu,\tau^2)$ then $kX \sim \mbox{Scale-inv-}\chi^2(\nu, k\tau^2)$.
- If $X \sim \mbox{inv-}\chi^2(\nu)$ (inverse-chi-squared distribution) then $X \sim \mbox{Scale-inv-}\chi^2(\nu, 1/\nu)$.
- If $X \sim \mbox{Scale-inv-}\chi^2(\nu,\tau^2)$ then $\frac{X}{\tau^2\nu} \sim \mbox{inv-}\chi^2(\nu)$ (inverse-chi-squared distribution).
- If $X \sim \mbox{Scale-inv-}\chi^2(\nu,\tau^2)$ then $X \sim \textrm{Inv-Gamma}\left(\frac{\nu}{2}, \frac{\nu\tau^2}{2}\right)$ (inverse-gamma distribution).
- The scaled inverse chi-squared distribution is a special case of the type 5 Pearson distribution.

References

Gelman, A. et al. (1995), Bayesian Data Analysis, pp. 474–475; also pp. 47, 480.

1. Gelman et al. (1995), Bayesian Data Analysis (1st ed.), p. 68.
