normal-inverse-gamma

Probability density function

Parameters

{\displaystyle \mu \,} location (real)

{\displaystyle \lambda >0\,}

{\displaystyle \alpha >0\,}

{\displaystyle \beta >0\,}

Support

{\displaystyle x\in (-\infty ,\infty )\,\!,\;\sigma ^{2}\in (0,\infty )}

PDF

{\displaystyle {\frac {\sqrt {\lambda }}{\sqrt {2\pi \sigma ^{2}}}}{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1}\exp \left(-{\frac {2\beta +\lambda (x-\mu )^{2}}{2\sigma ^{2}}}\right)}

Mean

{\displaystyle \operatorname {E} [x]=\mu }

{\displaystyle \operatorname {E} [\sigma ^{2}]={\frac {\beta }{\alpha -1}}}, for {\displaystyle \alpha >1}

Mode

{\displaystyle x=\mu \;{\textrm {(univariate)}},x={\boldsymbol {\mu }}\;{\textrm {(multivariate)}}}

{\displaystyle \sigma ^{2}={\frac {\beta }{\alpha +1+1/2}}\;{\textrm {(univariate)}},\sigma ^{2}={\frac {\beta }{\alpha +1+k/2}}\;{\textrm {(multivariate)}}}

Variance

{\displaystyle \operatorname {Var} [x]={\frac {\beta }{(\alpha -1)\lambda }}}, for {\displaystyle \alpha >1}

{\displaystyle \operatorname {Var} [\sigma ^{2}]={\frac {\beta ^{2}}{(\alpha -1)^{2}(\alpha -2)}}}, for {\displaystyle \alpha >2}

{\displaystyle \operatorname {Cov} [x,\sigma ^{2}]=0}, for {\displaystyle \alpha >1}

In probability theory and statistics, the normal-inverse-gamma distribution (or Gaussian-inverse-gamma distribution) is a four-parameter family of multivariate continuous probability distributions. It is the conjugate prior of a normal distribution with unknown mean and variance.

Suppose

{\displaystyle x\mid \sigma ^{2},\mu ,\lambda \sim \mathrm {N} (\mu ,\sigma ^{2}/\lambda )\,\!}

has a normal distribution with mean {\displaystyle \mu } and variance {\displaystyle \sigma ^{2}/\lambda }, where

{\displaystyle \sigma ^{2}\mid \alpha ,\beta \sim \Gamma ^{-1}(\alpha ,\beta )\!}

has an inverse-gamma distribution. Then {\displaystyle (x,\sigma ^{2})} has a normal-inverse-gamma distribution, denoted as

{\displaystyle (x,\sigma ^{2})\sim {\text{N-}}\Gamma ^{-1}(\mu ,\lambda ,\alpha ,\beta )\!.}

({\displaystyle {\text{NIG}}} is also used instead of {\displaystyle {\text{N-}}\Gamma ^{-1}.})

The normal-inverse-Wishart distribution is a generalization of the normal-inverse-gamma distribution that is defined over multivariate random variables.

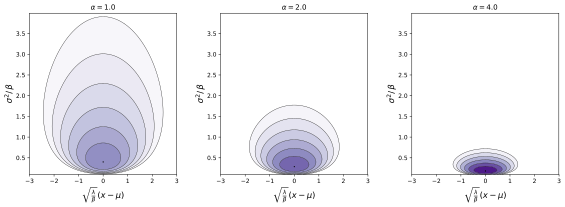

Probability density function

{\displaystyle f(x,\sigma ^{2}\mid \mu ,\lambda ,\alpha ,\beta )={\frac {\sqrt {\lambda }}{\sigma {\sqrt {2\pi }}}}\,{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\,\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1}\exp \left(-{\frac {2\beta +\lambda (x-\mu )^{2}}{2\sigma ^{2}}}\right)}
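The univariate density can be evaluated directly from this formula. The following is a minimal sketch using only the Python standard library; the function names `nig_logpdf` and `nig_pdf` are hypothetical and chosen for illustration. Working on the log scale is a design choice to avoid overflow in {\displaystyle \beta ^{\alpha }/\Gamma (\alpha )} for large {\displaystyle \alpha }.

```python
import math


def nig_logpdf(x, sigma2, mu, lam, alpha, beta):
    """Log-density of N-Gamma^{-1}(mu, lam, alpha, beta) at the point (x, sigma2).

    Hypothetical helper: a term-by-term transcription of the univariate PDF.
    """
    if lam <= 0 or alpha <= 0 or beta <= 0:
        raise ValueError("lam, alpha and beta must be positive")
    if sigma2 <= 0:
        return -math.inf  # outside the support sigma^2 > 0
    return (
        0.5 * math.log(lam)                      # sqrt(lambda)
        - 0.5 * math.log(2 * math.pi * sigma2)   # 1 / (sigma * sqrt(2 pi))
        + alpha * math.log(beta)                 # beta^alpha
        - math.lgamma(alpha)                     # 1 / Gamma(alpha)
        - (alpha + 1) * math.log(sigma2)         # (1 / sigma^2)^(alpha + 1)
        - (2 * beta + lam * (x - mu) ** 2) / (2 * sigma2)
    )


def nig_pdf(x, sigma2, mu, lam, alpha, beta):
    """Density obtained by exponentiating the log-density."""
    return math.exp(nig_logpdf(x, sigma2, mu, lam, alpha, beta))
```

By construction, the result should agree with the product of the normal density of {\displaystyle x} given {\displaystyle \sigma ^{2}} and the inverse-gamma density of {\displaystyle \sigma ^{2}}.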

For the multivariate form where {\displaystyle \mathbf {x} } is a {\displaystyle k\times 1} random vector,

{\displaystyle f(\mathbf {x} ,\sigma ^{2}\mid {\boldsymbol {\mu }},\mathbf {V} ^{-1},\alpha ,\beta )=|\mathbf {V} |^{-1/2}{(2\pi )^{-k/2}}\,{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\,\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1+k/2}\exp \left(-{\frac {2\beta +(\mathbf {x} -{\boldsymbol {\mu }})^{T}\mathbf {V} ^{-1}(\mathbf {x} -{\boldsymbol {\mu }})}{2\sigma ^{2}}}\right).}

where {\displaystyle |\mathbf {V} |} is the determinant of the {\displaystyle k\times k} matrix {\displaystyle \mathbf {V} }. Note how this last equation reduces to the first form if {\displaystyle k=1} so that {\displaystyle \mathbf {x} ,\mathbf {V} ,{\boldsymbol {\mu }}} are scalars.

Alternative parameterization

It is also possible to let {\displaystyle \gamma =1/\lambda }, in which case the PDF becomes

{\displaystyle f(x,\sigma ^{2}\mid \mu ,\gamma ,\alpha ,\beta )={\frac {1}{\sigma {\sqrt {2\pi \gamma }}}}\,{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\,\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1}\exp \left(-{\frac {2\gamma \beta +(x-\mu )^{2}}{2\gamma \sigma ^{2}}}\right)}

In the multivariate form, the corresponding change would be to regard the covariance matrix {\displaystyle \mathbf {V} } instead of its inverse {\displaystyle \mathbf {V} ^{-1}} as a parameter.

Cumulative distribution function

{\displaystyle F(x,\sigma ^{2}\mid \mu ,\lambda ,\alpha ,\beta )={\frac {e^{-{\frac {\beta }{\sigma ^{2}}}}\left({\frac {\beta }{\sigma ^{2}}}\right)^{\alpha }\left(\operatorname {erf} \left({\frac {{\sqrt {\lambda }}(x-\mu )}{{\sqrt {2}}\sigma }}\right)+1\right)}{2\sigma ^{2}\Gamma (\alpha )}}}

Marginal distributions

Given

{\displaystyle (x,\sigma ^{2})\sim {\text{N-}}\Gamma ^{-1}(\mu ,\lambda ,\alpha ,\beta )\!.}

as above, {\displaystyle \sigma ^{2}} by itself follows an inverse gamma distribution:

{\displaystyle \sigma ^{2}\sim \Gamma ^{-1}(\alpha ,\beta )\!}

while {\displaystyle {\sqrt {\frac {\alpha \lambda }{\beta }}}(x-\mu )} follows a t distribution with {\displaystyle 2\alpha } degrees of freedom. [1]

Proof for {\displaystyle \lambda =1}

For {\displaystyle \lambda =1} the probability density function is

{\displaystyle f(x,\sigma ^{2}\mid \mu ,\alpha ,\beta )={\frac {1}{\sigma {\sqrt {2\pi }}}}\,{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\,\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1}\exp \left(-{\frac {2\beta +(x-\mu )^{2}}{2\sigma ^{2}}}\right)}

The marginal distribution over {\displaystyle x} is

{\displaystyle {\begin{aligned}f(x\mid \mu ,\alpha ,\beta )&=\int _{0}^{\infty }d\sigma ^{2}f(x,\sigma ^{2}\mid \mu ,\alpha ,\beta )\\&={\frac {1}{\sqrt {2\pi }}}\,{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\int _{0}^{\infty }d\sigma ^{2}\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1/2+1}\exp \left(-{\frac {2\beta +(x-\mu )^{2}}{2\sigma ^{2}}}\right)\end{aligned}}}

Except for the normalization factor, the expression under the integral coincides with the inverse-gamma density

{\displaystyle \Gamma ^{-1}(x;a,b)={\frac {b^{a}}{\Gamma (a)}}{\frac {e^{-b/x}}{{x}^{a+1}}},}

with the argument {\displaystyle x=\sigma ^{2}}, {\displaystyle a=\alpha +1/2}, and {\displaystyle b={\frac {2\beta +(x-\mu )^{2}}{2}}}. (In the last expression {\displaystyle x} is the normal-inverse-gamma variable, not the argument of the inverse-gamma density.)

Since

{\displaystyle \int _{0}^{\infty }dx\Gamma ^{-1}(x;a,b)=1,\quad \int _{0}^{\infty }dxx^{-(a+1)}e^{-b/x}=\Gamma (a)b^{-a}}

it follows that

{\displaystyle \int _{0}^{\infty }d\sigma ^{2}\left({\frac {1}{\sigma ^{2}}}\right)^{\alpha +1/2+1}\exp \left(-{\frac {2\beta +(x-\mu )^{2}}{2\sigma ^{2}}}\right)=\Gamma (\alpha +1/2)\left({\frac {2\beta +(x-\mu )^{2}}{2}}\right)^{-(\alpha +1/2)}}

Substituting this expression and factoring out the dependence on {\displaystyle x} gives

{\displaystyle f(x\mid \mu ,\alpha ,\beta )\propto _{x}\left(1+{\frac {(x-\mu )^{2}}{2\beta }}\right)^{-(\alpha +1/2)}.}

The shape of the generalized Student's t-distribution is

{\displaystyle t(x|\nu ,{\hat {\mu }},{\hat {\sigma }}^{2})\propto _{x}\left(1+{\frac {1}{\nu }}{\frac {(x-{\hat {\mu }})^{2}}{{\hat {\sigma }}^{2}}}\right)^{-(\nu +1)/2}}

The marginal distribution {\displaystyle f(x\mid \mu ,\alpha ,\beta )} therefore follows a t-distribution with {\displaystyle 2\alpha } degrees of freedom:

{\displaystyle f(x\mid \mu ,\alpha ,\beta )=t(x|\nu =2\alpha ,{\hat {\mu }}=\mu ,{\hat {\sigma }}^{2}=\beta /\alpha )}
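The marginal result can be checked numerically. The sketch below, using only the Python standard library, integrates the joint density over {\displaystyle \sigma ^{2}} with Simpson's rule and compares it with the closed-form Student's t density. It assumes the general-{\displaystyle \lambda } form implied by the statement that {\displaystyle {\sqrt {\alpha \lambda /\beta }}(x-\mu )} is t-distributed, i.e. squared scale {\displaystyle \beta /(\alpha \lambda )}. The function names, the truncation point of the integral, and the restriction {\displaystyle \alpha \geq 1/2} are assumptions of this illustration, not part of the derivation.

```python
import math


def student_t_pdf(x, nu, loc, scale2):
    """Density of a location-scale Student's t with nu degrees of freedom."""
    z2 = (x - loc) ** 2 / scale2
    log_norm = (
        math.lgamma((nu + 1) / 2)
        - math.lgamma(nu / 2)
        - 0.5 * math.log(nu * math.pi * scale2)
    )
    return math.exp(log_norm - (nu + 1) / 2 * math.log1p(z2 / nu))


def nig_marginal_x_numeric(x, mu, lam, alpha, beta, steps=20000):
    """Integrate the joint NIG density over sigma^2 using Simpson's rule.

    With u = 1/sigma^2 the integrand becomes C * u**(alpha - 1/2) * exp(-b*u),
    where b = beta + lam*(x - mu)**2 / 2. The integral is truncated where the
    exponential tail is negligible (an assumption of this sketch).
    """
    if alpha < 0.5:
        raise ValueError("this sketch assumes alpha >= 1/2")
    if steps % 2:
        raise ValueError("steps must be even for Simpson's rule")
    b = beta + 0.5 * lam * (x - mu) ** 2
    log_c = (
        0.5 * math.log(lam / (2 * math.pi))
        + alpha * math.log(beta)
        - math.lgamma(alpha)
    )

    def g(u):
        return u ** (alpha - 0.5) * math.exp(-b * u)

    upper = (alpha + 40.0) / b
    h = upper / steps
    total = g(0.0) + g(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * g(i * h)
    return math.exp(log_c) * total * h / 3


def nig_marginal_x_closed_form(x, mu, lam, alpha, beta):
    """Closed form: t with 2*alpha degrees of freedom and scale^2 = beta/(alpha*lam)."""
    return student_t_pdf(x, 2 * alpha, mu, beta / (alpha * lam))
```

For moderate parameter values the two functions should agree to within the quadrature error.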

In the multivariate case, the marginal distribution of {\displaystyle \mathbf {x} } is a multivariate t distribution:

{\displaystyle \mathbf {x} \sim t_{2\alpha }({\boldsymbol {\mu }},{\frac {\beta }{\alpha }}\mathbf {V} )\!}

Suppose

{\displaystyle (x,\sigma ^{2})\sim {\text{N-}}\Gamma ^{-1}(\mu ,\lambda ,\alpha ,\beta )\!.}

Then for {\displaystyle c>0},

{\displaystyle (cx,c\sigma ^{2})\sim {\text{N-}}\Gamma ^{-1}(c\mu ,\lambda /c,\alpha ,c\beta )\!.}

Proof: To prove this let {\displaystyle (x,\sigma ^{2})\sim {\text{N-}}\Gamma ^{-1}(\mu ,\lambda ,\alpha ,\beta )} and fix {\displaystyle c>0}. Defining {\displaystyle Y=(Y_{1},Y_{2})=(cx,c\sigma ^{2})}, observe that the PDF of the random variable {\displaystyle Y} evaluated at {\displaystyle (y_{1},y_{2})} is given by {\displaystyle 1/c^{2}} times the PDF of a {\displaystyle {\text{N-}}\Gamma ^{-1}(\mu ,\lambda ,\alpha ,\beta )} random variable evaluated at {\displaystyle (y_{1}/c,y_{2}/c)}. Hence the PDF of {\displaystyle Y} evaluated at {\displaystyle (y_{1},y_{2})} is given by:

{\displaystyle f_{Y}(y_{1},y_{2})={\frac {1}{c^{2}}}{\frac {\sqrt {\lambda }}{\sqrt {2\pi y_{2}/c}}}\,{\frac {\beta ^{\alpha }}{\Gamma (\alpha )}}\,\left({\frac {1}{y_{2}/c}}\right)^{\alpha +1}\exp \left(-{\frac {2\beta +\lambda (y_{1}/c-\mu )^{2}}{2y_{2}/c}}\right)={\frac {\sqrt {\lambda /c}}{\sqrt {2\pi y_{2}}}}\,{\frac {(c\beta )^{\alpha }}{\Gamma (\alpha )}}\,\left({\frac {1}{y_{2}}}\right)^{\alpha +1}\exp \left(-{\frac {2c\beta +(\lambda /c)\,(y_{1}-c\mu )^{2}}{2y_{2}}}\right).\!}

The right hand expression is the PDF for a {\displaystyle {\text{N-}}\Gamma ^{-1}(c\mu ,\lambda /c,\alpha ,c\beta )} random variable evaluated at {\displaystyle (y_{1},y_{2})}, which completes the proof.
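The scaling identity can also be spot-checked numerically. The following is a small sketch with hypothetical function names: it evaluates the change-of-variables density (Jacobian factor {\displaystyle 1/c^{2}}) and the density with the rescaled parameters {\displaystyle (c\mu ,\lambda /c,\alpha ,c\beta )}, which should coincide at any point with {\displaystyle y_{2}>0}.

```python
import math


def nig_pdf(x, sigma2, mu, lam, alpha, beta):
    """Univariate normal-inverse-gamma density at (x, sigma2), sigma2 > 0."""
    log_density = (
        0.5 * math.log(lam / (2 * math.pi * sigma2))
        + alpha * math.log(beta)
        - math.lgamma(alpha)
        - (alpha + 1) * math.log(sigma2)
        - (2 * beta + lam * (x - mu) ** 2) / (2 * sigma2)
    )
    return math.exp(log_density)


def scaled_pdf_via_jacobian(y1, y2, c, mu, lam, alpha, beta):
    """Density of Y = (c*x, c*sigma^2) obtained by change of variables."""
    return nig_pdf(y1 / c, y2 / c, mu, lam, alpha, beta) / c ** 2


def scaled_pdf_via_parameters(y1, y2, c, mu, lam, alpha, beta):
    """Density of N-Gamma^{-1}(c*mu, lam/c, alpha, c*beta) at (y1, y2)."""
    return nig_pdf(y1, y2, c * mu, lam / c, alpha, c * beta)
```

A check at a handful of points is not a proof, but it is a quick way to catch a misplaced factor of {\displaystyle c} when implementing the transformation.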

Normal-inverse-gamma distributions form an exponential family with natural parameters {\displaystyle \textstyle \theta _{1}={\frac {-\lambda }{2}}}, {\displaystyle \textstyle \theta _{2}=\lambda \mu }, {\displaystyle \textstyle \theta _{3}=\alpha }, and {\displaystyle \textstyle \theta _{4}=-\beta +{\frac {-\lambda \mu ^{2}}{2}}} and sufficient statistics {\displaystyle \textstyle T_{1}={\frac {x^{2}}{\sigma ^{2}}}}, {\displaystyle \textstyle T_{2}={\frac {x}{\sigma ^{2}}}}, {\displaystyle \textstyle T_{3}=\log {\big (}{\frac {1}{\sigma ^{2}}}{\big )}}, and {\displaystyle \textstyle T_{4}={\frac {1}{\sigma ^{2}}}}.

Measures difference between two distributions.


Posterior distribution of the parameters

See the articles on normal-gamma distribution and conjugate prior.

Interpretation of the parameters

See the articles on normal-gamma distribution and conjugate prior.

Generating normal-inverse-gamma random variates

Generation of random variates is straightforward:

1. Sample {\displaystyle \sigma ^{2}} from an inverse gamma distribution with parameters {\displaystyle \alpha } and {\displaystyle \beta }.

2. Sample {\displaystyle x} from a normal distribution with mean {\displaystyle \mu } and variance {\displaystyle \sigma ^{2}/\lambda }.
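The two sampling steps can be sketched with the Python standard library as follows. The function name `sample_nig` is hypothetical. The sketch relies on the fact that if {\displaystyle 1/\sigma ^{2}} is gamma-distributed with shape {\displaystyle \alpha } and rate {\displaystyle \beta }, then {\displaystyle \sigma ^{2}} is inverse-gamma distributed with parameters {\displaystyle \alpha } and {\displaystyle \beta }; `random.gammavariate` takes a scale rather than a rate, so {\displaystyle 1/\beta } is passed.

```python
import math
import random


def sample_nig(mu, lam, alpha, beta, rng=None):
    """Draw one (x, sigma2) pair from N-Gamma^{-1}(mu, lam, alpha, beta).

    `rng` is an optional random.Random instance, useful for reproducibility.
    """
    if lam <= 0 or alpha <= 0 or beta <= 0:
        raise ValueError("lam, alpha and beta must be positive")
    rng = rng if rng is not None else random.Random()
    # Step 1: sigma^2 ~ inverse-gamma(alpha, beta), drawn as the reciprocal
    # of a gamma variate with shape alpha and scale 1/beta.
    precision = rng.gammavariate(alpha, 1.0 / beta)
    sigma2 = 1.0 / precision
    # Step 2: x ~ normal with mean mu and variance sigma^2 / lam.
    x = rng.gauss(mu, math.sqrt(sigma2 / lam))
    return x, sigma2
```

With {\displaystyle \alpha >1}, the sample averages of many draws should approach {\displaystyle \mu } for {\displaystyle x} and {\displaystyle \beta /(\alpha -1)} for {\displaystyle \sigma ^{2}}, matching the means listed for this distribution.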

The normal-gamma distribution is the same distribution parameterized by precision rather than variance.

A generalization of this distribution which allows for a multivariate mean and a completely unknown positive-definite covariance matrix {\displaystyle \sigma ^{2}\mathbf {V} } (whereas in the multivariate inverse-gamma distribution the covariance matrix is regarded as known up to the scale factor {\displaystyle \sigma ^{2}}) is the normal-inverse-Wishart distribution.

Denison, David G. T.; Holmes, Christopher C.; Mallick, Bani K.; Smith, Adrian F. M. (2002) Bayesian Methods for Nonlinear Classification and Regression, Wiley. ISBN 0471490369

Koch, Karl-Rudolf (2007) Introduction to Bayesian Statistics (2nd Edition), Springer. ISBN 354072723X
